Customers don’t want to have to sweat about the temperature in their data centers, and today we’ve introduced a new solution that will feel like a breath of fresh air.

Our newly integrated data center solution enables customers to confidently operate facilities at higher temperatures, even without chillers. The servers, storage and networking equipment in the Dell Fresh Air cooling solution are tested, validated and warrantied to operate within the highest current temperature and humidity guidelines issued by ASHRAE (American Society of Heating, Refrigerating and Air-Conditioning Engineers). They are capable of short-term, excursion-based operation at temperatures up to 113 degrees Fahrenheit (45 degrees Celsius), the highest temperature warrantied for mainstream servers in the industry.

The benefits of our data center technologies with Fresh Air capability include substantial reductions in energy consumption and the resulting operational costs, even in data centers whose cooling has already been economized. Customers can save over $100K per year per megawatt of IT in their data centers.

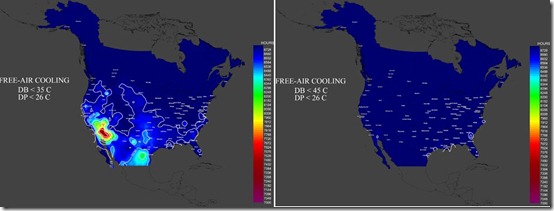

Left picture: regions that stay at 35 C or below (dark blue), where chillerless operation is possible today.

Right picture: the chillerless opportunity with Dell’s new offering.

New data center construction by companies such as Facebook, Google, and Yahoo has demonstrated a shift toward fresh-air-cooled data centers that do not rely on chiller technology. However, the standard allowable maximum temperature of 95 F (35 C) for today’s IT equipment limits where such facilities can be built without a backup chiller plant to handle high-temperature excursions.

To meet the needs of a broader range of customers interested in more efficient and economical facility designs, we have designed and validated a portfolio of servers, storage, networking and power infrastructure that delivers short-term, excursion-based operation across a wider environmental window, with limited impact on performance.

Dell systems have been developed to operate at temperatures from 41 F (5 C) to 113 F (45 C) and allowable humidity from 5 percent to 90 percent. This level of design robustness is the result of years of research into IT system reliability and the effects of various environmental conditions. That research led to numerous improvements in our products, components, materials and designs, along with a new set of reliability validation tests. The result is products that can tolerate up to 900 hours per year of operation at 104 F (40 C) and up to 90 hours per year at 113 F (45 C).
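The post does not spell out how excursion hours are counted. As a rough planning aid, the sketch below assumes that each hour with an inlet temperature above 35 C and up to 40 C draws on the 900-hour budget, each hour above 40 C and up to 45 C draws on the 90-hour budget, and any hour below 5 C or above 45 C is out of range. These band boundaries, the names `check_excursions` and `ExcursionReport`, and the hourly input format are hypothetical, not Dell's published accounting rules.

```python
# Hypothetical sketch: check whether a year of hourly inlet temperatures
# fits the excursion budgets quoted above. Band boundaries and counting
# rules are assumptions, not Dell's published accounting method.
from dataclasses import dataclass

# Budgets taken from the figures in the text (hours per year).
BUDGET_40C_HOURS = 90 * 10   # hours above 35 C, up to 40 C
BUDGET_45C_HOURS = 90        # hours above 40 C, up to 45 C

MIN_C = 5.0            # lower operating limit
STANDARD_MAX_C = 35.0  # standard allowable maximum
BAND1_MAX_C = 40.0     # top of the 900-hour band
ABS_MAX_C = 45.0       # top of the 90-hour band


@dataclass
class ExcursionReport:
    hours_35_to_40: int
    hours_40_to_45: int
    hours_out_of_range: int

    @property
    def within_budget(self) -> bool:
        """True if no hour is out of range and both budgets are respected."""
        return (
            self.hours_out_of_range == 0
            and self.hours_35_to_40 <= BUDGET_40C_HOURS
            and self.hours_40_to_45 <= BUDGET_45C_HOURS
        )


def check_excursions(hourly_inlet_c: list[float]) -> ExcursionReport:
    """Count hourly inlet temperatures (deg C) in each assumed band."""
    band1 = band2 = out = 0
    for t in hourly_inlet_c:
        if t < MIN_C or t > ABS_MAX_C:
            out += 1
        elif t > BAND1_MAX_C:
            band2 += 1
        elif t > STANDARD_MAX_C:
            band1 += 1
    return ExcursionReport(band1, band2, out)
```

For example, a year spent mostly at 25 C with 120 hours at 38 C and 30 hours at 43 C would report 120 and 30 hours in the two bands and fit within both budgets. Humidity limits are ignored here for brevity.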

Deploying Dell servers, storage units or network switches with Fresh Air capability gives customers more flexibility in setting the operating temperature of the data center. Running warmer is a best practice that can help increase energy efficiency and decrease operational costs. In some climates, the capital cost of building a chiller plant as part of the data center facility can be eliminated altogether.

Using this approach, our customers can achieve more than $100K per year in operational savings per megawatt (MW) of IT and potentially avoid approximately $3M per MW of IT in capital expenditures. In addition, IT systems that tolerate higher temperatures can reduce the risk of IT failures during facility cooling outages.
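The per-megawatt figures scale with IT load. The back-of-the-envelope sketch below assumes the savings are linear in load and treats the quoted numbers as point values (the operational figure is stated as a lower bound, so real savings could be higher). The `estimate_savings` helper and the example facility size and horizon are hypothetical; this is not a sizing tool.

```python
# Rough scaling of the per-megawatt figures quoted above. Linear scaling
# with IT load is an assumption made for illustration.
OPEX_SAVINGS_PER_MW_YEAR = 100_000   # USD per MW of IT per year ("more than $100K")
CAPEX_AVOIDED_PER_MW = 3_000_000     # USD per MW of IT ("approximately $3M")


def estimate_savings(it_load_mw: float, years: int, chiller_eliminated: bool) -> float:
    """Return estimated total savings in USD over the given horizon.

    Operational savings accrue every year; the avoided chiller-plant
    capital cost is counted once, and only if the plant is eliminated.
    """
    opex = OPEX_SAVINGS_PER_MW_YEAR * it_load_mw * years
    capex = CAPEX_AVOIDED_PER_MW * it_load_mw if chiller_eliminated else 0.0
    return opex + capex
```

For a 2 MW facility over five years, the operational savings alone come to $1M, and eliminating the chiller plant would add roughly $6M in avoided capital cost, for about $7M in total.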

Dell is taking the lead in driving data center efficiency, not only through its work with ASHRAE but also through other emerging initiatives, including our industry-leading Modular Data Center products, with which our DCS team is setting a new benchmark for overall data center performance and efficiency.

Do you have a question or comment about how the Fresh Air cooling solution can benefit our customers? Post it here in the comments and join the conversation.

See the related press release.

Additional Information

Dell Data Center Efficiency

Dell Power & Cooling

Dell PowerEdge Servers

Dell EqualLogic Storage

Dell PowerConnect Managed Gigabit Ethernet Switches

Dell Power Distribution Units